Uncertainties and Differentiable Imaging

December 25, 2024 | Ni Chen

- The Laser Allergy

- Years of Wandering

- Seeds of Understanding

- The Nature of Uncertainty

- The Dangerous Simplification

- Differentiable Revolution

- Closing the Loop

- Reflection

- References

This post is also published in Nature Research Communities.

The Laser Allergy

After completing my BS in computer science, I transitioned to optical engineering for graduate school—but my body seemed to rebel against the very environment I’d chosen. Every time I entered the lab, waves of nausea, headaches, and dizziness would overwhelm me. After months of this mysterious suffering, I reached a conclusion: I must be allergic to lasers.

The truth was less exotic but equally challenging. I simply lacked the foundational optics knowledge my peers took for granted. My advisor, Prof. Park, noticing my struggles, tried to support me by assigning more computational tasks and reducing my hands-on experimental work. But in optical engineering, experiments are unavoidable. Despite my fears and physical unease, I had no choice but to face them head-on.

The challenges compounded relentlessly. SNU’s safety protocols forbade solo experiments, the lab was a long distance from our office, and as the only woman on the team, I struggled to round up the three people usually required to oversee my work. Among many tough moments, one weekend stands out vividly. I snuck into the lab alone, hoping to finish some measurements, but disaster struck instead. I accidentally inserted the wrong screw into the optical table, and it went straight into the optical axis. The metric and inch screws had been mixed up, and no matter what I did, I couldn’t get it out. Hours of frantic effort yielded nothing. Surrounded by the lab’s black curtains, I completely broke down, sobbing alone in that dark room without having collected a single piece of data.

This was just one instance of the countless hardships I faced during those years. The cruel irony wasn’t lost on me: I’d chosen computational imaging as my research focus—a field that barely even had a formal name back then. Yet this area demanded not only computational expertise but also exceptional experimental skills. I possessed neither. Those tears in the dark lab seemed to confirm what I feared most: I had chosen the wrong path.

Years of Wandering

My struggles extended far beyond the optical bench. For years, I found myself caught between two worlds, neither of which seemed to fully accept me. In the optics community, I was the computer scientist who couldn’t align a system properly. In the computational community, I was the one obsessed with physics that others preferred to abstract away.

The search for appropriate computational algorithms to perform inverse imaging became its own odyssey. Traditional optimization methods felt disconnected from the physical reality of light. Machine learning approaches treated imaging as just another dataset, ignoring the rich physics that governs how images form. I spent countless hours implementing algorithms that worked beautifully in simulation but failed catastrophically when faced with real experimental data.

Each failure taught me something crucial: the gap between our computational models and physical reality wasn’t just a technical challenge—it was a fundamental philosophical problem. We were trying to force the messy, uncertain physical world into neat computational boxes, and the world was refusing to cooperate.

Seeds of Understanding

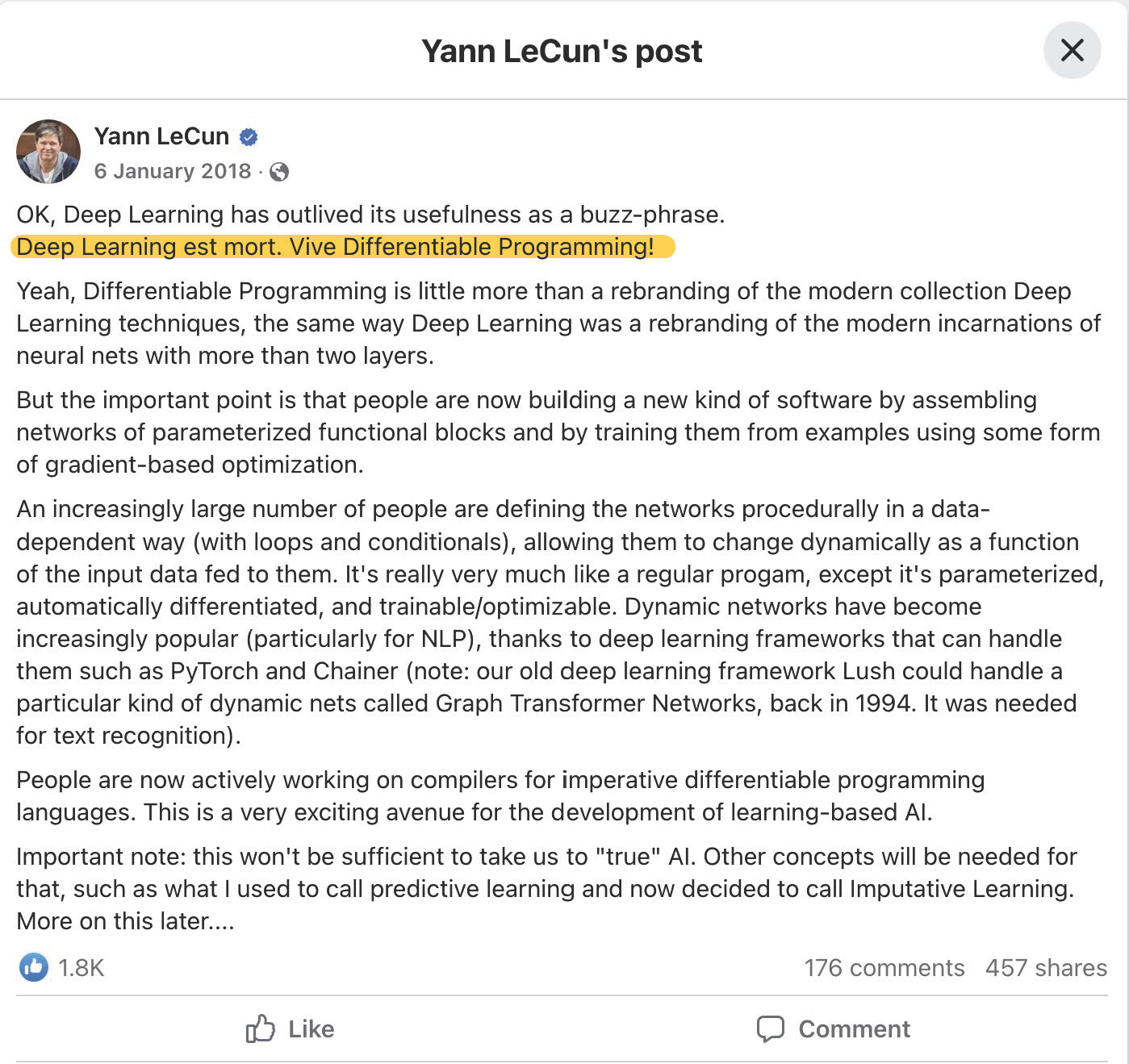

The shift began quietly. When I first explored differentiable holography [1] in late 2020, my grasp of uncertainties was far shallower than it is today. Back then, the field appeared to me as little more than a clever technical trick: an approach to optimize holographic reconstructions via gradient descent. I drew inspiration from Yann LeCun’s 2018 declaration: “Deep Learning est mort. Vive Differentiable Programming!” His vision of differentiable programming as a unifying framework resonated deeply, suggesting a way to bridge the computational and physical worlds I had been struggling to connect.

Yann LeCun’s post in 2018: “Deep Learning est mort. Vive Differentiable Programming!” [2]

It was the concept of differentiable programming that first sowed the seed for differentiable imaging [3]. If error feedback could move through neural networks, why not let that feedback stretch across entire computational imaging systems? Why not let error feedback flow through optical elements, through the physical act of light moving, and through every step of how images are formed and reconstructed?

In fact, this line of thinking opens up an even more fundamental reframing: the whole field of computational imaging can be thought of as a neural network in its own right. Just like neural networks rely on layered computations, iterative error correction, and interconnected parts to optimize outputs, computational imaging weaves together optical physics, signal processing, and reconstruction algorithms into one unified, adaptive framework. Every piece of the imaging chain—from lenses to code—works like a “layer” that transforms information, while error feedback fine-tunes the system as a whole. This bigger-picture view not only drove my early work on differentiable holography, but also made me see how deeply computational imaging aligns with the core logic of neural networks—even if I didn’t fully grasp just how far-reaching its implications would be back then.
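One way to make this "imaging chain as layers" picture concrete is the chain rule. The notation below is a sketch under assumptions that are not taken from the original work: real-valued signals, a squared-error loss, and hypothetical stage functions \(f_1, \dots, f_N\) (optics, sensor, reconstruction) with parameters \(\theta_1, \dots, \theta_N\).

\[\hat{y} = f_N\big(\cdots f_2\big(f_1(x;\theta_1);\theta_2\big)\cdots;\theta_N\big), \qquad \mathcal{L} = \tfrac{1}{2}\,\lVert \hat{y} - y \rVert^2\]

\[\frac{\partial \mathcal{L}}{\partial \theta_k} = (\hat{y} - y)^{\top}\, \frac{\partial f_N}{\partial f_{N-1}} \cdots \frac{\partial f_{k+1}}{\partial f_k}\, \frac{\partial f_k}{\partial \theta_k}\]

Here \(x\) is the scene, \(y\) the recorded measurement, and \(\hat{y}\) the model's prediction. The gradient for an early stage \(k\) passes through the Jacobians of every later stage, which is the sense in which error feedback travels back through the whole chain, exactly as it does through the layers of a neural network.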

Only between 2020 and 2022, as I delved deeper into making holographic systems differentiable, did the role of uncertainties begin to reveal itself. Each attempt to create end-to-end differentiable systems forced me to confront the same uncertainties that had plagued my experimental work years earlier. But now, instead of seeing them as obstacles, I began to recognize them as fundamental features of reality.

The Nature of Uncertainty

The revelation that transformed my perspective was both simple and profound: uncertainties are the essence of the universe. From quantum mechanics to thermodynamics, from biological systems to cosmic evolution, uncertainty isn’t a flaw in our measurements or models — it’s a fundamental property of reality itself.

This understanding completely reframed my approach to computational imaging. Traditional methods treat uncertainties as noise to be minimized, errors to be corrected, or nuisances to be eliminated. But what if we’re fighting against the very nature of the universe? What if, instead of trying to eliminate uncertainty, we designed systems that expected it, embraced it, and even leveraged it?

When an editor from Advanced Physics Research invited me to contribute to their newly launched journal, I saw an opportunity to articulate this evolving understanding. What emerged was a perspective that transformed my years of failure and frustration into the foundation for a new approach to computational imaging.

The Dangerous Simplification

As my understanding deepened, I began to observe a concerning trend within our field. Far too many researchers were ensnared by what I term the ‘reductionist fallacy’: the mistaken belief that computational imaging is synonymous with its underlying code. This perilous equation

\[\text{CODE} \equiv \text{COMPUTATIONAL IMAGING}\]

carries profound implications that extend well beyond mere academic discourse.

This pervasive misconception illuminates a recurring challenge in computational imaging. A researcher meticulously develops an imaging algorithm, demonstrating its efficacy within their specific laboratory environment. However, when this code is subsequently shared, attempts by others to reproduce its performance in different settings frequently encounter significant discrepancies or outright failures. Such outcomes can lead to unfortunate misinterpretations, casting doubt on the original development or the inherent robustness of the code itself.

The root cause of these challenges lies in the intrinsic dependency of computational imaging code on the precise physical parameters of the system for which it was initially designed. Even subtle factors—such as minor misalignments of optical components, thermal fluctuations affecting optical path lengths, or variations in camera sensor characteristics—can introduce significant deviations from the original system’s conditions. An algorithm, meticulously optimized for a particular set of physical assumptions, may lack the inherent capacity to compensate for these changes. Consequently, an algorithm that performs optimally in one configuration might exhibit reduced efficacy or complete non-functionality in another, not due to flaws in the algorithm itself, but because the physical environment diverges from the conditions presumed during its development.

Traditional microscopists grasped this fundamental lesson centuries ago, encapsulated in a simple yet profound principle: when one alters the sample, one must refocus the microscope. The physical world, by its very nature, demands constant adaptation. Yet, we often harbor the unrealistic expectation that static code can somehow transcend this fundamental reality. The uncomfortable truth is, it cannot. Genuine computational imaging necessitates a profound comprehension of both theoretical and practical domains: not merely the ability to craft elegant algorithms, but a deep understanding of how light truly propagates through imperfect optics, how real-world sensors inevitably deviate from idealized models, and how uncertainties can cascade throughout an entire system.

Herein lies an unexpected twist. The very complexity that often engenders such frustration might, paradoxically, offer a path toward its own resolution. The inherent inseparability of system and code in computational imaging actually provides a natural safeguard for intellectual property. Unlike pure software, which can be effortlessly copied and deployed universally, computational imaging solutions remain inextricably linked to their origin. The most exquisitely designed algorithm becomes merely an aesthetic embellishment without the specific optical design it was conceived for, the precise calibration procedures that enable its optimal function, and the profound understanding of physical constraints that informed every line of its development. In a sense, this intrinsic connection protects years of hard-won knowledge—one cannot simply acquire the code and expect it to perform as intended.

But what if we could fundamentally transform this limitation? Imagine those same researchers, who once leveled accusations of irreplicability, now empowered to deploy imaging systems that automatically adapt to their unique laboratory setups. Picture code that transcends static instructions, evolving into a living system capable of learning and optimizing its performance within each distinct physical context. This paradigm shift would transmute computational imaging’s apparent vulnerability—its dependence on physical specifics—into its most formidable strength. Instead of demanding that every user master both the intricacies of optics and the complexities of computation, future imaging systems could autonomously observe their own behavior, quantify their inherent uncertainties, and self-optimize for whatever real-world conditions they encounter. The very algorithm that might catastrophically fail in traditional, rigid approaches could, through this adaptive intelligence, discover how to succeed in virtually any reasonable physical configuration. This would profoundly democratize access to advanced imaging techniques, liberating researchers to concentrate on their core scientific inquiries rather than grappling with system-specific implementations.

My own years of arduous struggle had indelibly etched this lesson into my understanding. Every misaligned component that sabotaged a day’s work, every measurement that stubbornly defied simulation, every algorithm that functioned flawlessly in theory but faltered in practice — each served as a visceral reminder that the physical world steadfastly refuses to be neatly confined within our pristine computational models. The uncertainties I repeatedly encountered were not flaws in the system, but rather inherent features of reality itself. I now recognize that differentiable imaging possesses the transformative potential to convert this hard-won understanding into robust systems that can spare others from enduring the same arduous learning process, thereby forging a future where computational imaging truly serves the needs of all who seek its power.

Differentiable Revolution

The path forward crystallized as I continued developing differentiable holography and began to see its broader implications. Differentiable programming wasn’t just about optimizing neural networks—it was about creating a language that could describe both physical and computational processes in a unified framework. This realization sparked the broader vision of differentiable imaging.

Since 2020, this journey has taken me across three countries / four regions, where I’ve had the privilege of meeting wonderful scientists from diverse communities. Each encounter brought unique inspirations. These cross-pollinations of ideas from different cultures and disciplines enriched my understanding immeasurably, showing me how the same fundamental principles could be viewed through wonderfully different lenses.

2024 Nobel Prize in Physics

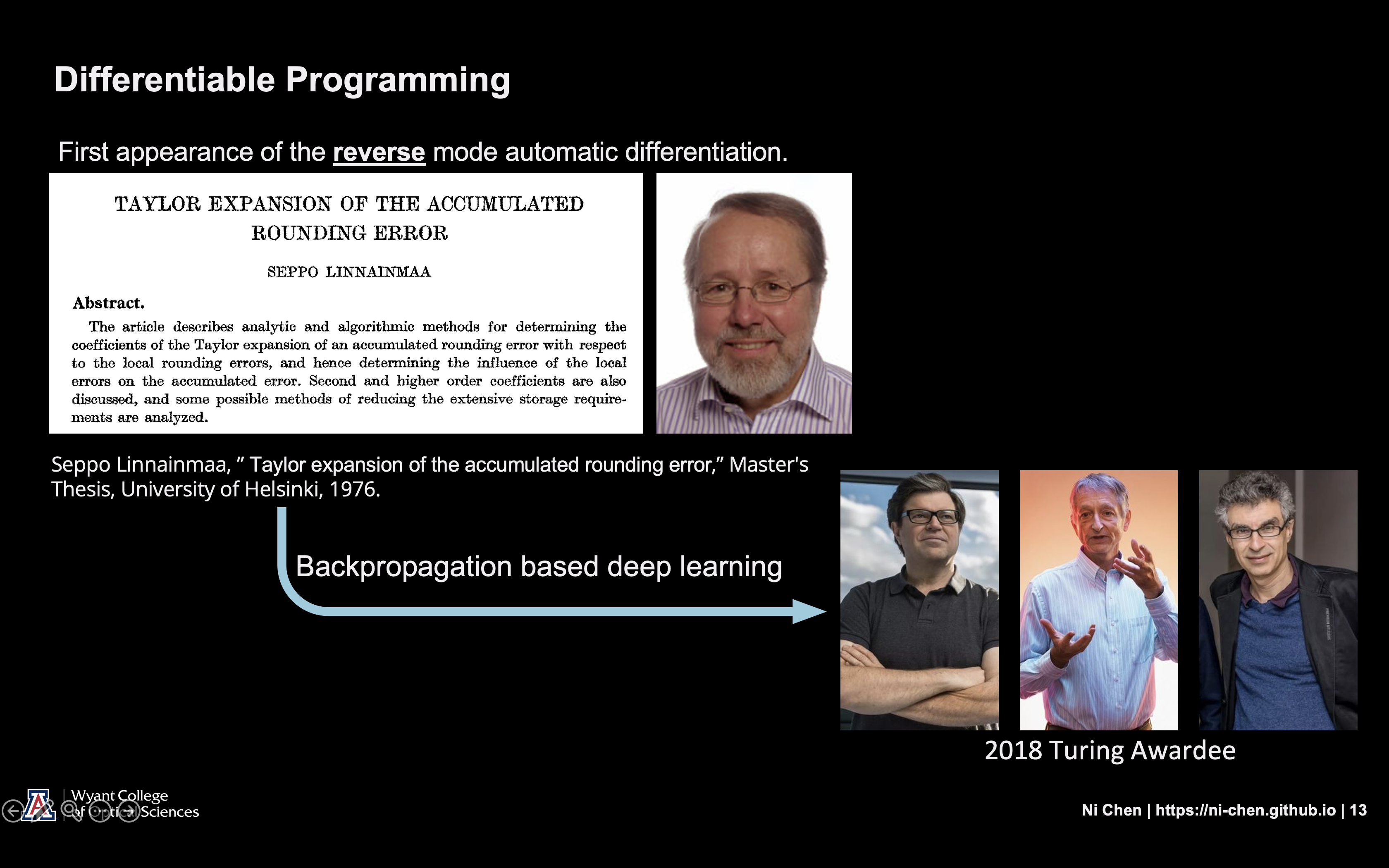

When the 2024 Nobel Prize in Physics was announced, recognizing Geoffrey Hinton’s work, I experienced a moment of delightful recognition. As I watched the news coverage, Hinton’s face seemed strangely familiar. Then it hit me: I had been using his picture in my presentation slides since 2023! There it was, in my slide explaining how deep learning is a special case of differentiable programming. The recognition felt like a cosmic validation: here was the work I had been citing to explain our foundational concepts, now honored with science’s highest award.

One of the slides from my iCANX Youth Talk in April 2023 [4]

This moment brought everything full circle. Differentiable imaging represents the natural evolution of these ideas: not just applying gradients to optimize parameters, but creating systems where information flows seamlessly between physical and computational domains. The Nobel recognition validated what many of us had long believed: that the fusion of computation and physics wasn’t just a technical trick, but a fundamental new way of understanding and interacting with the world.

Closing the Loop

Differentiable imaging represents more than just another optimization technique. It creates a closed loop between physical reality and computational models, enabling systems that learn from every photon, every measurement, every uncertainty. Instead of treating noise and variations as enemies to be defeated, these systems incorporate them as information to be processed and understood [5, 6, 7, 1].

This approach transforms imaging from a one-way capture process into a dynamic conversation between the system and its environment. A differentiable imaging system can optimize its optical elements based on computational feedback, adjust its algorithms based on physical constraints, and quantify its uncertainties to make informed decisions about what it can and cannot resolve.
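As a toy illustration of this closed loop, the sketch below lets a model "refocus" itself by gradient descent on one uncertain system parameter. Everything in it is an assumption made for illustration and not the method of the cited papers: a 1D scene, a known calibration target, a Gaussian point spread function whose width `sigma` stands in for an unknown defocus, noise-free data, and hypothetical function names. The gradient is derived by hand to keep the example dependency-free; in practice an automatic differentiation framework would supply it.

```python
import math

def gaussian_kernel(sigma, radius=8):
    """Normalized Gaussian PSF samples and their derivative w.r.t. sigma."""
    offsets = range(-radius, radius + 1)
    u = [math.exp(-(j * j) / (2.0 * sigma * sigma)) for j in offsets]
    # d/dsigma of exp(-j^2 / (2 sigma^2)) is u * j^2 / sigma^3
    du = [u_j * (j * j) / sigma ** 3 for u_j, j in zip(u, offsets)]
    z, dz = sum(u), sum(du)
    k = [u_j / z for u_j in u]
    # Quotient rule for the normalized kernel k = u / z
    dk = [(du_j - k_j * dz) / z for du_j, k_j in zip(du, k)]
    return k, dk

def convolve(signal, kernel):
    """Same-size 1D convolution with zero padding at the borders."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for t, w in enumerate(kernel):
            src = i - (t - radius)
            if 0 <= src < n:
                acc += w * signal[src]
        out.append(acc)
    return out

def loss_and_grad(sigma, target, measured):
    """Squared-error mismatch between model and measurement, and dLoss/dsigma."""
    k, dk = gaussian_kernel(sigma)
    pred = convolve(target, k)
    dpred = convolve(target, dk)  # sensitivity of the prediction to sigma
    resid = [p - m for p, m in zip(pred, measured)]
    loss = 0.5 * sum(r * r for r in resid)
    grad = sum(r * d for r, d in zip(resid, dpred))
    return loss, grad

def refocus(target, measured, sigma0, lr=1.0, steps=500):
    """Plain gradient descent on the PSF width, starting from sigma0."""
    sigma = sigma0
    for _ in range(steps):
        _, grad = loss_and_grad(sigma, target, measured)
        sigma -= lr * grad
    return sigma

# Calibration target: two point sources on a 41-sample line (illustrative).
target = [0.0] * 41
target[15] = target[25] = 1.0

TRUE_SIGMA = 2.0     # the "real" system, unknown to the algorithm
ASSUMED_SIGMA = 1.0  # what static code would have hard-wired

measured = convolve(target, gaussian_kernel(TRUE_SIGMA)[0])

loss_before, _ = loss_and_grad(ASSUMED_SIGMA, target, measured)
sigma_hat = refocus(target, measured, ASSUMED_SIGMA)
loss_after, _ = loss_and_grad(sigma_hat, target, measured)

print(f"assumed sigma = {ASSUMED_SIGMA}, mismatch = {loss_before:.4f}")
print(f"adapted sigma = {sigma_hat:.3f}, mismatch = {loss_after:.2e}")
```

The hard-wired `ASSUMED_SIGMA` plays the role of static code carried into a new lab, while `refocus` plays the role of the feedback loop. The same pattern extends, at least in principle, to estimating the scene and several system parameters jointly, although identifiability and noise then need far more care than this sketch gives them.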

I must emphasize that differentiable imaging research is still in its early stages. We’re at the beginning of what I believe will be a transformative journey for the entire field. The frameworks are still being developed, the tools are still being refined, and the full potential remains largely unexplored. Yet even in this nascent stage, I’m convinced that differentiable imaging offers solutions to many of the fundamental issues that have plagued computational imaging research for decades. It addresses the rigid separation between optics and computation, the inability to adapt to changing conditions, the challenges of uncertainty quantification, and the barriers between different imaging modalities.

The beauty of this framework lies in its philosophical alignment with the nature of reality itself. By acknowledging that uncertainty is fundamental, not incidental, we stop fighting against the universe and start working with it. Whether we’re designing microscopes that adapt to biological samples, cameras that compensate for atmospheric turbulence, or medical imaging systems that account for patient movement, the principles remain the same: embrace uncertainty, make things differentiable, and let the system learn.

Looking forward, our recent papers explore how this approach [8], combined with concepts like digital twins, could create truly autonomous imaging ecosystems. These systems wouldn’t just capture images; they would understand their own limitations, predict potential problems, and optimize themselves for each unique scenario. While we’re only scratching the surface of what’s possible, the early results suggest a future where imaging systems are as adaptive and intelligent as the researchers who use them.

Reflection

As I write this, I can’t help but think back to all those moments of frustration—not just that weekend with the stuck screw, but the years of feeling caught between communities, of algorithms that wouldn’t converge, of experiments that wouldn’t align. Each failure contained a lesson about the fundamental nature of uncertainty in our universe.

My “laser allergy” was real, just not in the way I imagined. It was an allergy to the rigid, deterministic view of imaging systems that pretends uncertainties don’t exist. The journey from those early struggles to differentiable imaging has been one of learning to embrace what I once tried to avoid.

By making systems differentiable and adaptive, we’re not just solving technical problems. We’re aligning our technology with the fundamental nature of reality—a reality where uncertainty isn’t a bug but a feature, where adaptation isn’t optional but essential, and where the most profound insights often emerge from our deepest struggles.

The tears in that dark lab have transformed into a vision for systems that expect the unexpected, that learn from chaos, and that find clarity not by eliminating uncertainty but by embracing it as the essence of the universe itself.

References

1. Ni Chen, Congli Wang, Wolfgang Heidrich, “∂H: Differentiable Holography,” Laser & Photonics Reviews, 2023.

2. Yann LeCun’s Facebook post: https://www.facebook.com/yann.lecun/posts/10155003011462143

3. Ni Chen, Liangcai Cao, Ting-Chung Poon, Byoungho Lee, Edmund Y. Lam, “Differentiable Imaging: A New Tool for Computational Optical Imaging,” Advanced Physics Research, 2023.

4. iCANX Youth Talks Vol.8: https://ueurmavzb.vzan.com/v3/course/alive/754913272

5. Ni Chen, Yang Wu, Chao Tan, Liangcai Cao, Jun Wang, Edmund Y. Lam, “Uncertainty-Aware Fourier Ptychography,” Light: Science & Applications, 2025.

6. Ni Chen, Edmund Y. Lam, “Differentiable pixel-super-resolution lensless imaging,” Optics Letters, 2025.

7. Yang Wu, Jun Wang, Sigurdur Thoroddsen, Ni Chen*, “Single-Shot High-Density Volumetric Particle Imaging Enabled by Differentiable Holography,” IEEE Transactions on Industrial Informatics, 2024.

8. Ni Chen, David J. Brady, Edmund Y. Lam, “Differentiable Imaging: progress, challenges, and outlook,” Advanced Devices & Instrumentation, 2025.